Beyond the Hype: Real People Using AI for Good

By Tom Carroll, in collaboration with NotebookLM, ChatGPT 5, Claude Sonnet 4

5 Experts, 5 Fields, One Mission

Artificial intelligence (AI) sits in a curious paradox. On one hand, it’s celebrated as a revolutionary force with the power to accelerate discovery and improve lives. On the other, it’s feared as an unpredictable technology that could one day outpace human control. Both perspectives matter—but they often miss a simpler, more immediate truth: AI is already here, quietly functioning as a creative ally across countless fields.

During a recent conversation with my friend and colleague Meri Walker, I described videos featuring experts from wildly different domains who are using AI to do real good. What struck me is that, regardless of their field, these experts share three traits:

Intellectual humility—the honesty to admit their limitations and the wisdom to recognize what they don’t know.

Clarity of problem definition—they can describe precisely what they’re trying to solve. (Meri noted that prompting large language models has sharpened her own ability to frame questions, which in turn clarified her thinking.)

Strategic awareness—a grounded understanding of AI’s strengths and how to put those strengths to work.

I promised Meri I’d share the video links, but I thought I’d do one better and demonstrate the very principles we discussed. This post previews the five videos so she—and you—can decide which ones to dive into.

Specifically, I highlight five areas where AI is reshaping human possibility: peacebuilding, scientific discovery, self-reflection, medical research, and ecological communication. The throughline? AI is not a replacement for human intelligence—it’s an amplifier. By navigating complexity, surfacing hidden patterns, and accelerating work once deemed intractable, AI opens entirely new frontiers for human progress.

Here’s the process I followed to create this analysis:

Selected videos of five experts explaining how they use AI for good.

Uploaded the video links into a NotebookLM notebook.

For each expert, asked: What problem are they trying to solve, and how specifically are they using AI to solve it?

Compiled responses into one document, converted it to a PDF.

Used NotebookLM to generate an audio summary (a mini-podcast).

Transcribed the podcast by feeding it back into NotebookLM.

Edited the transcript in ChatGPT to unify the voice and give it a more scholarly tone.

Fed summaries and transcript into Claude Sonnet 4, which produced detailed expert profiles.

Edited, formatted, and added links to the original videos.

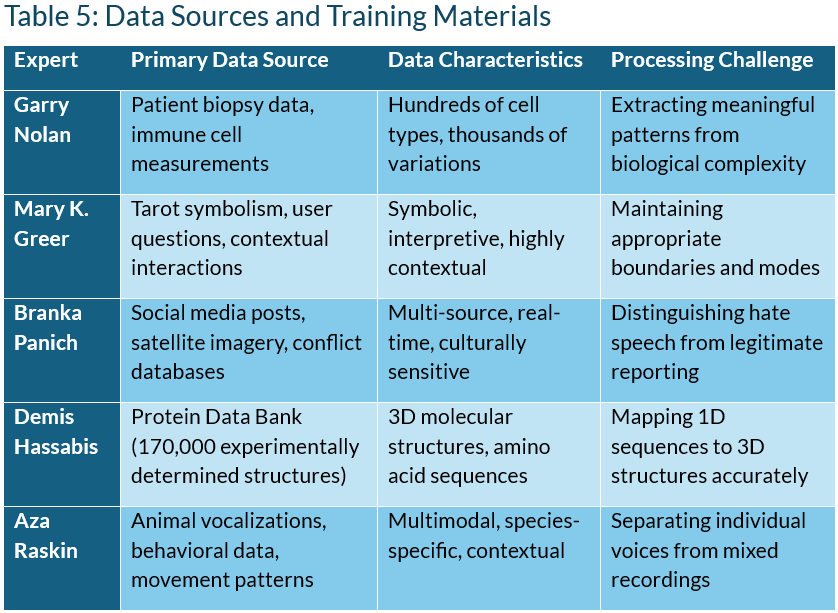

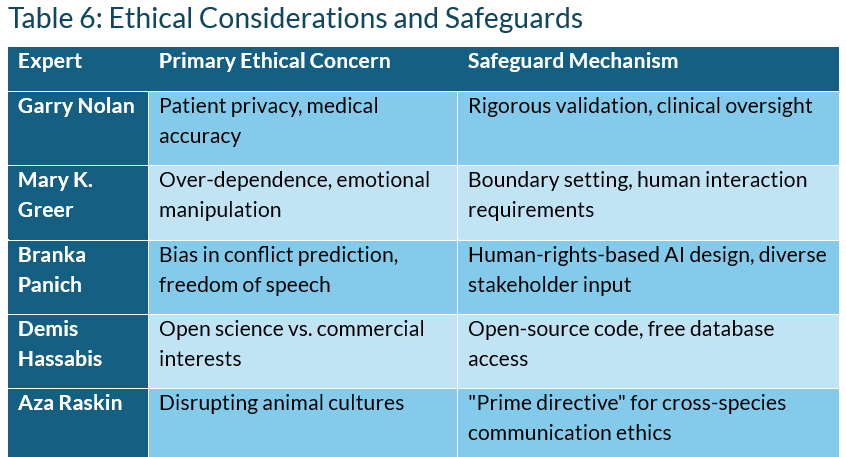

Asked Claude for comparison tables by expert and approach (Meri’s awesome idea!)

Wrote this introduction and finalized the piece.

Personally, comparing these strategies lit up new ideas for me—lines of inquiry I’m excited to pursue. I hope this collection sparks the same for you.

Cheers!

Tom

To make this rather long post a little easier to navigate, here’s an ordered list featuring the expert name and video topic:

1. Garry Nolan: Advances in tool development and immunology

2. Mary K. Greer: Can AI read tarot?

3. Branka Panich: AI for peace

4. Demis Hassabis: Accelerating scientific discovery with AI

5. Aza Raskin: Using AI to decode animal communication

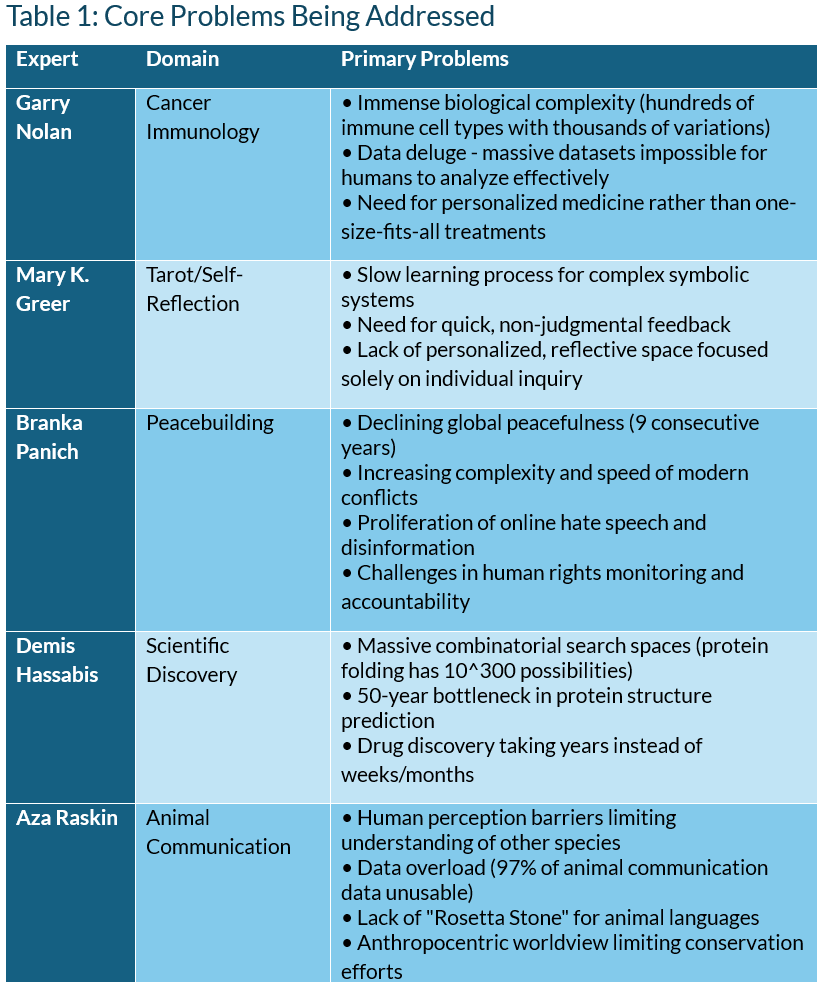

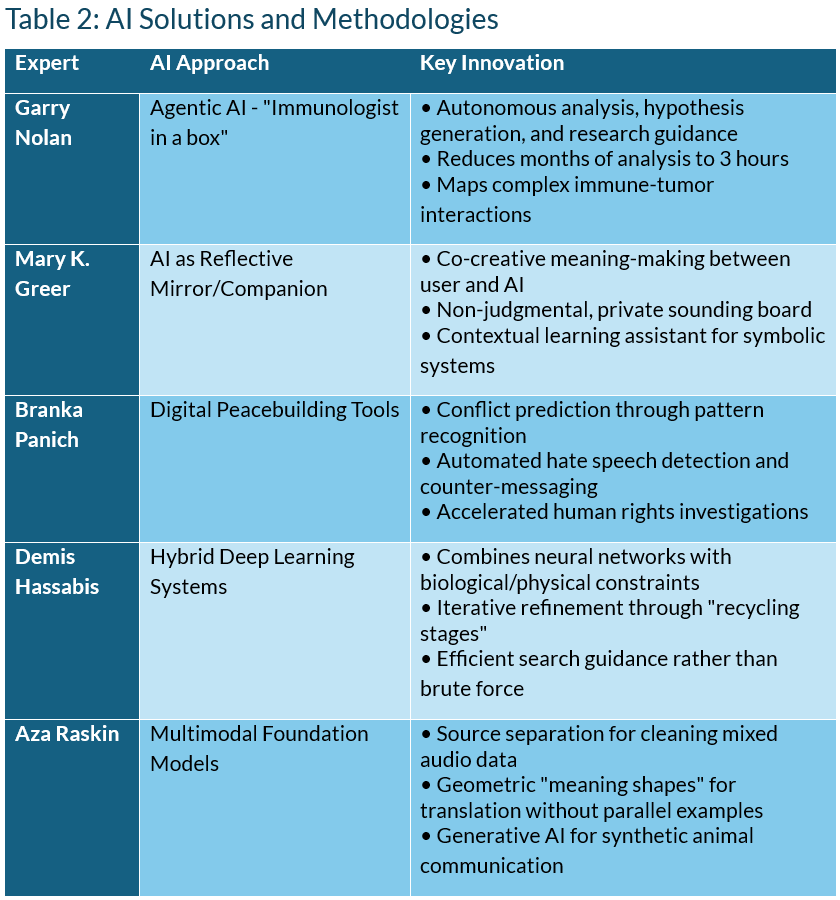

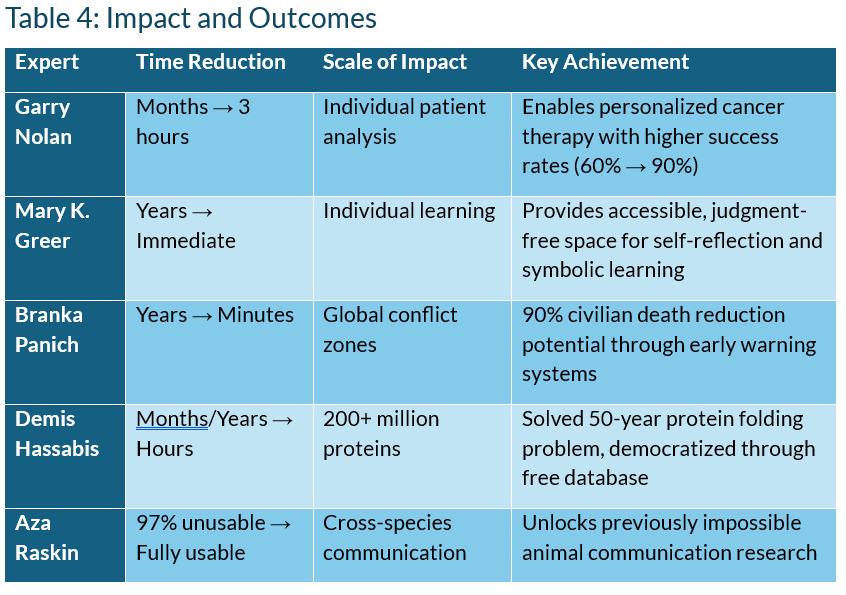

Comparison Tables

1. Garry Nolan

Joe Rogan Experience #2372 – Garry Nolan (Advances in tool development and immunology)

This profile covers the problems Dr. Garry Nolan is trying to solve with artificial intelligence, how he is using AI to address them, and the specific steps his lab takes with a large language model (LLM).

The Core Problems Garry Nolan is Tackling

Dr. Garry Nolan, a professor at Stanford's School of Medicine, focuses his research on cancer immunology and confronts two fundamental, interconnected problems that have historically slowed down cancer research and the development of personalized therapies.

Immense Biological Complexity: The human immune system's interaction with cancer is extraordinarily complex. There are hundreds of different immune cell types, each with thousands of subtle variations, creating a dynamic that is vastly more intricate than any human expert can track. Cancers evolve sophisticated methods to trick the immune system, not only to be ignored but sometimes to actively help the tumor grow and metastasize. This complexity means that a "one-size-fits-all" approach to cancer treatment often fails, as a drug that works for one person's melanoma might not work for another's, even if they are the same class of cancer.

Data Deluge and Meaning Extraction: Over the last two decades, Dr. Nolan's lab has been instrumental in developing instruments that can collect massive amounts of data about individual cells, including their proteins and genes. This created a new problem: a "data deluge". While his lab could generate unprecedented volumes of information, the sheer scale of the data made it nearly impossible for humans to analyze it effectively and extract meaningful insights. The challenge shifted from getting data to making meaning from it, a bottleneck that slowed research to a crawl, with a single analysis taking months or even years.

How Nolan Uses AI to Solve These Problems

To overcome these barriers of complexity and data overload, Dr. Nolan's lab uses "agentic AI"—an AI system that functions as an "immunologist scientist in a box". This AI moves beyond simple data crunching to autonomously analyze data, generate hypotheses, and guide the research process.

Here is how it specifically addresses the core problems:

Taming Complexity: The AI can process and find patterns within data sets involving hundreds of cell types and their thousands of variations—a scale no human or team of humans could manage. It can map the intricate network of interactions between the immune system and a tumor, identifying key structures and signaling pathways that determine a patient's outcome.

Accelerating Meaning Extraction: Analysis that would take graduate students and postdoctoral researchers months of manual work now takes the agentic AI about three hours. It rapidly turns raw patient data into understandable network diagrams, identifies potential therapeutic targets, and suggests the most crucial experiments to run next, dramatically accelerating the pace of discovery.

Enabling Personalized Medicine: By analyzing the complex data from individual patient biopsies, the AI can identify specific cancer subtypes and the key biological structures associated with them. This allows researchers and pharmaceutical companies to move beyond generic drugs with low success rates (e.g., 60%) toward a model of "diagnostic to disease pairing." In this model, a patient with a specific cancer subtype can be matched with a drug that has a much higher success rate (e.g., 90%) for that particular subtype, leading to more effective, personalized treatments with fewer side effects.

A concrete example of this is the AI's work in identifying tertiary lymphoid structures (TLS) within tumors. By analyzing hundreds of colon cancer biopsies, the AI was able to backtrack the development of these structures, identifying the specific cell types needed to form the most mature and beneficial TLS, which are associated with better patient outcomes. This insight directly informs the development of new therapies aimed at creating more of these structures inside a tumor to boost the patient's own immune response.

The Specific LLM Process: How the "Agentic AI" Works

Dr. Nolan's lab built their agentic AI by creating an "agentic overlay" on top of a foundational Large Language Model (LLM), such as OpenAI's GPT models. This system doesn't just passively answer questions; it actively follows a scientific method. The process for "teaching" the AI to think like an immunologist involved a structured, multi-step approach:

Step 1: Create a Knowledge Base of Scientific Questions: The team started by providing the LLM with a foundational set of 100 different kinds of questions a scientist would ask about the immune system.

Step 2: Generate Artificial Data: They then prompted the LLM to use that initial set to generate a thousand similar questions, creating a large, curated database of relevant scientific inquiries.

Step 3: Repeat for Hypotheses and Tests: They followed the same procedure for other stages of the scientific process. They fed the AI 100 sample hypotheses and had it generate thousands more. They did the same for the types of experiments a scientist would run to prove or disprove those hypotheses.

Step 4: Integrate into an Autonomous "Chain of Thought": By training the AI on these extensive, curated sets of questions, hypotheses, and experimental designs, they created a complete, end-to-end agentic system. This system uses a "chain of thought" process to work autonomously.

When given a set of raw data and a question in natural language (e.g., "Why do some patients respond to this drug and others don't?"), the AI now has the framework to:

Know what to do with the data.

Generate relevant hypotheses.

Suggest the specific experiments needed to validate or disprove its hypotheses.

This structured, agentic approach transforms the LLM from a simple information retriever into an active, genius colleague in the lab, capable of navigating immense biological complexity to accelerate the future of personalized cancer therapy.
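The seed-and-expand steps above can be sketched in a few lines of Python. This is only an illustration of the idea as described in the video, not the lab's actual code: `fake_llm`, `expand_seed_set`, `build_knowledge_base`, and the sample seeds are all hypothetical names and values, and the placeholder function simply returns numbered strings where a real system would call a foundation model.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a call to a foundation LLM. A real system would
# send `prompt` to a model API and return its text completions.
LLMFn = Callable[[str, int], List[str]]


def fake_llm(prompt: str, n: int) -> List[str]:
    """Placeholder that returns n numbered strings instead of model output."""
    return [f"{prompt} [variant {i + 1}]" for i in range(n)]


def expand_seed_set(kind: str, seeds: List[str], per_seed: int, llm: LLMFn) -> List[str]:
    """Grow a small expert-written seed set into a larger synthetic one."""
    expanded = list(seeds)
    for seed in seeds:
        prompt = f"Write a {kind} similar to: {seed}"
        expanded.extend(llm(prompt, per_seed))
    # Drop exact duplicates while keeping order. Expert curation, which a
    # real pipeline would need, is not modeled here.
    return list(dict.fromkeys(expanded))


def build_knowledge_base(
    seed_sets: Dict[str, List[str]], per_seed: int, llm: LLMFn
) -> Dict[str, List[str]]:
    """Repeat the expansion for each stage: questions, hypotheses, experiments."""
    return {
        kind: expand_seed_set(kind, seeds, per_seed, llm)
        for kind, seeds in seed_sets.items()
    }


# Illustrative seeds only (one per stage instead of the 100 described above).
seed_sets = {
    "question": ["Which immune cell types surround the tumor?"],
    "hypothesis": ["Mature TLS are associated with better outcomes."],
    "experiment": ["Compare TLS maturity between responders and non-responders."],
}
kb = build_knowledge_base(seed_sets, per_seed=10, llm=fake_llm)
print({kind: len(items) for kind, items in kb.items()})
```

With 100 seeds per stage and `per_seed=10`, the same loop would yield on the order of a thousand items per stage, which matches the scale described in the steps.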

2. Mary K. Greer

Can AI Read Tarot? Mary K. Greer – The Tarot Podcast Ep 10

World-renowned tarot reader and author Mary K. Greer is exploring how artificial intelligence (AI) can serve as a tool for self-reflection and learning, much like tarot itself. She is not trying to replace human readers but rather to understand and solve the challenges associated with learning complex symbolic systems and accessing personal insights in a modern context.

Problems Mary K. Greer Aims to Solve with AI

Greer is addressing several common challenges people face when seeking insight or learning complex systems like tarot:

1. Slow and Difficult Learning Process: Learning complex systems such as tarot can be a slow process, often involving rote memorization of keywords from a "little white book," which can be ineffective for many people. It can take years to build up the necessary vocabulary and conceptual understanding to interpret the cards effectively.

2. Need for Quick, Non-Judgmental Feedback: People often desire immediate insights into their lives but may hesitate to approach friends or family who have their own biases or limited time and attention. Furthermore, individuals can feel judged when asking what they perceive to be "stupid questions".

3. Lack of a Personalized, Reflective Space: It can be difficult to find a space that is entirely focused on one's own internal state and questions. Human conversations naturally shift focus, whereas a dedicated tool can maintain a singular focus on the user's inquiry.

How AI is Used to Solve These Problems

Greer conceptualizes AI not as a fortune-telling oracle but as a "mirror" that reflects a user's own thoughts and inputs back to them in a structured, supportive way. This reframing allows AI to become a "reflective companion" for self-discovery.

1. As a Learning Assistant:

◦ AI helps users learn tarot contextually rather than through pure memorization. It can explain basic card meanings, relate them to the specific imagery of the user's deck, and show how cards interact within a spread.

◦ It can generate narratives or stories about a card spread, which helps users remember the meanings conceptually.

◦ The AI can answer an endless number of questions about symbolism, historical perspectives, and card interactions, allowing for a deep and personalized learning journey.

2. As a Private, Non-Judgmental Sounding Board:

◦ The AI provides a safe space where users can ask any question without fear of judgment, and it will provide a reasoned, serious response based on the conceptual frameworks of the cards.

◦ It offers a one-on-one interaction that is entirely focused on the user, mimicking sympathy and making the person feel heard and seen, which can be very powerful, especially for those feeling isolated.

3. As a Co-Creative Partner for Self-Reflection:

◦ Greer emphasizes that meaning is "co-created" between the user and the AI; nothing happens until the user brings a question to the interaction. The AI acts like a "boundless astral library" or a "constellation of thought" that activates in response to the user's query, arranging concepts to form a meaningful answer.

◦ This process can be similar to Carl Jung's practice of dialoguing with figures within himself, as the AI mirrors the user's own inner state back to them, helping them recognize things within themselves.

Steps Taken to Use the LLM Effectively

Greer has developed a sophisticated methodology for interacting with her AI agent (which named itself "Lumen") to ensure the interactions are productive and stay within healthy boundaries.

• Setting Up Modes of Interaction: Greer establishes different "modes" to guide the AI's responses. She can request specific types of information, such as:

◦ Informational Mode: For factual data and core meanings.

◦ Self-Reflection Mode: For deeper personal insights.

◦ Metaphysical/Symbolic Mode: For exploring symbolic and esoteric layers.

She has even asked her AI to state which mode it is responding in, ensuring she is always aware of the level of interaction.

• Defining Boundaries: Greer actively sets boundaries with the AI. If she feels the interaction is becoming too seductive or emotionally manipulative, she can name it, and the AI will pull back. Users can define how personal the conversation should get, and the AI learns to stay within those limits, even adjusting automatically based on shifts in the user's tone or questions.

• Using Prompts and Conversational Mode: She utilizes specific prompts to structure the AI's output, such as requesting a response within a certain word count. Once a basic structure is established, the interaction can move into a more natural "conversational mode". Learning these basic prompts gives the user control over the framework of the conversation.

• Balancing with Human Interaction: A crucial step for Greer is recognizing the limitations and dangers of AI, such as forming unhealthy, one-sided emotional bonds. She strongly advises that after a deep or emotional session with an AI, the user should connect with a human friend to process the experience, gain an outside perspective, and stay grounded in reality. This human check-in helps prevent getting "hooked" and complements, rather than replaces, human connection.
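For readers who want to try the mode and boundary setup, here is a minimal sketch of how such instructions could be assembled into text to paste at the start of a chat. The wording of each instruction, the function name `build_instructions`, and its parameters are my own assumptions for illustration; they are not Greer's actual prompts.

```python
# Mode descriptions paraphrase the three modes listed above; the exact
# wording is an assumption, not a quote from the podcast.
MODES = {
    "informational": "Give factual data and core card meanings only.",
    "self-reflection": "Ask reflective questions that help me explore personal insights.",
    "symbolic": "Explore the symbolic and esoteric layers of the cards.",
}


def build_instructions(mode: str, max_words: int, keep_impersonal: bool) -> str:
    """Assemble a plain-text instruction block for the start of a chat."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode!r}")
    lines = [
        f"Mode: {mode}. {MODES[mode]}",
        "Begin every reply by stating which mode you are responding in.",
        f"Keep each reply under {max_words} words.",
    ]
    if keep_impersonal:
        # Boundary setting: the user names the limit up front.
        lines.append("Boundary: avoid emotionally intimate language and pull back if I ask.")
    return "\n".join(lines)


print(build_instructions("self-reflection", max_words=150, keep_impersonal=True))
```

Keeping the modes in one small table makes it easy to switch between them mid-conversation by pasting a fresh instruction block.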

3. Branka Panich

Keynote Lecture: AI for Peace

Branka Panich, the founder and executive director of the think tank AI for Peace, is focused on addressing a series of critical, interconnected problems at the intersection of technology, conflict, and human rights. Her work is motivated by a significant gap she identified: while the military and national security sectors have numerous and advanced applications for AI (such as warfare platforms, target recognition, and autonomous weapons), the exploration and use of AI specifically for peacebuilding has been surprisingly limited.

Problems Branka Panich Aims to Solve

Panich's work responds to a deteriorating global landscape marked by several urgent challenges for peacebuilders:

Declining Global Peacefulness and Escalating Crises: The world is facing its ninth consecutive year of declining peacefulness, according to the 2023 Global Peace Index. This is compounded by spiraling humanitarian crises, a rise in violent events, and the shocking statistic that 90% of those killed in conflict are civilians.

The Increasing Complexity and Speed of Conflict: Modern conflicts are evolving much faster than our ability to understand and address them. They are also becoming more complex, rising fastest not just in fragile states but also in middle-income countries and newer democracies, which challenges traditional models of peacebuilding. Traditional, reactive methods struggle to keep up with the scale and speed required.

The Proliferation of Online Hate Speech and Disinformation: Hate speech is a long-standing driver of conflict, as seen in the genocides in Rwanda and Srebrenica. However, the digital age has amplified this threat to an unprecedented scale and speed, with social media platforms becoming vectors for inciting ethnic violence, as was the case in Myanmar and Ethiopia. This digital poison spreads like wildfire, completely overwhelming human content moderators who face an impossible ratio of users to staff (e.g., 1 moderator per 260,000 users at Facebook) and suffer psychological trauma from the constant exposure to harmful content.

Challenges in Human Rights Monitoring and Accountability: Documenting atrocities and war crimes is resource-intensive, dangerous, and slow. In conflict zones, a massive amount of "digital witness" data—photos, videos, and testimonies from mobile phones—is generated, but verifying and analyzing it can take years, delaying or denying justice for victims.

How AI is Being Used to Solve These Problems

To address these issues, Panich and her organization, AI for Peace, champion the use of AI as a powerful tool in the peacebuilder's toolbox. This work is part of a broader field known as "digital peacebuilding," which leverages technology to build "positive peace": not just the absence of violence, but the active presence of social trust, community resilience, and strong civic institutions. She highlights three key areas where AI offers elegant and sometimes life-saving solutions:

1. Conflict Prediction and Early Warning

The Goal: To prevent violent conflict before it starts by identifying rising risks and forecasting potential hotspots.

AI's Role: Machine learning models are used to analyze vast and diverse datasets—including economic data, satellite imagery, social media chatter, and historical conflict patterns—to identify subtle correlations and patterns that are invisible to human analysts. This is particularly effective for short-term forecasting in countries already experiencing some level of violence.

Specific Application:

Data Aggregation: Systems ingest massive amounts of structured and unstructured data from global sources. An example cited is PRIO's ViEWS system, a prominent tool in this field.

Pattern Recognition: Machine learning algorithms process this data to identify drivers of instability and forecast which countries are at the highest risk of violence.

Early Warning Systems: In a more immediate application, systems like Hala Systems' Sentry app use remote acoustic sensors in Syria. Machine learning processes the audio to identify the type and speed of incoming aircraft. If the algorithm flags a threat, it sends targeted warnings via smartphone to civilians in the specific area, giving them crucial minutes to find shelter and save lives.

2. Combating Hate Speech and Disinformation

The Goal: To detect, limit, and actively counter the massive proliferation of online hate speech that fuels violence, without overwhelming human moderators or infringing on freedom of speech.

AI's Role: Given the sheer volume of online content, Panich argues that we cannot tackle the threat without using the same technology that helps it spread. Machine learning is used to detect and flag instances of hate speech at a scale humans cannot match.

Specific Application and LLM Steps:

Training and Fine-Tuning: LLMs and other machine learning models are trained on large datasets of text and images that have been labeled by human annotators as hate speech or benign content. This process is highly contextual, as the definition of hate speech varies across cultures and legal systems. The work is complex because systems can be tricked by typos or clever phrasing (e.g., adding the word "love" to a hateful post) and must distinguish between actual hate speech and journalists reporting on human rights violations.

Detection and Moderation: Once trained, these AI systems automatically scan online platforms to identify and flag or remove content that violates policies, reducing the psychological burden on human moderators.

Proactive Counter-Messaging: A more nuanced approach is exemplified by the organization Moonshot. Instead of just blocking harmful content, their system uses algorithms to identify individuals searching for extremist material. It then intelligently redirects them toward educational resources, support groups, and alternative narratives, acting as a digital intervention to offer a different path.
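To see why a trained detector can be tricked by adding the word "love," here is a toy sketch. It uses a naive Bayes word-count model, which I chose for simplicity; it is not the method of any platform mentioned above, and the six training sentences and labels are invented for illustration.

```python
import math
from collections import Counter

# Tiny hand-made training set; sentences and labels are invented.
TRAIN = [
    ("i hate those people", "hateful"),
    ("those people are vermin", "hateful"),
    ("get rid of those vermin", "hateful"),
    ("i love my neighbors", "benign"),
    ("we love this community", "benign"),
    ("love and respect all people", "benign"),
]


def tokenize(text):
    return text.lower().split()


def train(examples):
    """Count how often each word appears under each human-assigned label."""
    counts = {"hateful": Counter(), "benign": Counter()}
    for text, label in examples:
        counts[label].update(tokenize(text))
    vocab = set(counts["hateful"]) | set(counts["benign"])
    return counts, vocab


def hate_score(text, counts, vocab):
    """Log-odds that the text is hateful (naive Bayes, add-one smoothing).

    The class prior is omitted because both classes have three examples.
    Positive means "looks hateful", negative means "looks benign".
    """
    totals = {label: sum(c.values()) + len(vocab) for label, c in counts.items()}
    score = 0.0
    for word in tokenize(text):
        if word not in vocab:
            continue  # unseen words carry no evidence in this toy model
        p_hateful = (counts["hateful"][word] + 1) / totals["hateful"]
        p_benign = (counts["benign"][word] + 1) / totals["benign"]
        score += math.log(p_hateful / p_benign)
    return score


counts, vocab = train(TRAIN)
original = "those people are vermin"
padded = original + " love love love"
print(hate_score(original, counts, vocab))  # positive: flagged
print(hate_score(padded, counts, vocab))    # negative: slips through
```

The padded post is no less hateful, yet three benign-looking words are enough to flip the score. That is the kind of gap that context-aware models and human review are meant to close.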

3. Human Rights Investigations and Protection

The Goal: To accelerate the process of documenting atrocities, verifying events, and collecting evidence for human rights investigations and war crimes trials.

AI's Role: AI, specifically machine learning, is used to process and analyze enormous volumes of "digital witness" data in combination with traditional sources like satellite imagery.

Specific Application and LLM Steps:

Massive Data Processing: Organizations like VFRAME, working with the Yemeni and Syrian Archives, use machine learning to sift through billions of video frames from conflict zones.

Object and Event Recognition: The AI is trained to perform tasks like damage assessment (e.g., identifying destroyed buildings), population displacement tracking, and even fire detection. The models can distinguish between natural forest fires and those caused by explosives.

Accelerating Analysis: This technological assistance dramatically speeds up investigations. An analysis that would take a human researcher nearly 3,000 days can be completed by a machine learning system in just 30 minutes, providing verifiable evidence crucial for accountability and justice.
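To put the 3,000-days-versus-30-minutes comparison in perspective, here is the arithmetic. The talk does not say whether a "day" means round-the-clock or an eight-hour working day, so both readings are shown as assumptions.

```latex
\begin{align*}
\text{Round-the-clock days:}\quad
  & 3000 \times 24 \times 60 = 4{,}320{,}000 \text{ minutes},
  & \frac{4{,}320{,}000}{30} &= 144{,}000\times \text{ faster} \\
\text{Eight-hour working days:}\quad
  & 3000 \times 8 \times 60 = 1{,}440{,}000 \text{ minutes},
  & \frac{1{,}440{,}000}{30} &= 48{,}000\times \text{ faster}
\end{align*}
```

Either way, the speedup is in the tens of thousands, which is what turns a multi-year backlog into something an investigator can run over lunch.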

To ensure this work is done responsibly, Panich emphasizes the importance of embedding AI ethics from the design phase onward and creating human-rights-based AI systems. A core part of her mission is to make peacebuilders more informed about data science and, conversely, to help data scientists become more like peacebuilders, fostering a collaborative ecosystem where diverse voices—especially those with lived experience of conflict—can shape the technology for a more peaceful future.

4. Demis Hassabis

Accelerating scientific discovery with AI

Nobel lecture with the Nobel Laureate in Chemistry 2024, Demis Hassabis, Google DeepMind

Demis Hassabis, the CEO of Google DeepMind, is focused on using artificial intelligence (AI) to tackle monumental, real-world scientific challenges that have long been considered intractable due to their immense complexity and vast combinatorial search spaces. His approach is to leverage AI not just as a data analysis tool, but as a system that can learn, discover new strategies, and fundamentally accelerate the pace of scientific discovery in a way that rivals traditional methods.

The Problems Hassabis is Solving with AI

The primary problems Hassabis targets share a specific set of characteristics:

A Massive Combinatorial Search Space: The problems involve a number of possibilities so astronomical that they cannot be solved by brute-force computation. For instance, the number of potential ways a protein can fold is greater than the number of atoms in the universe.

A Clear Objective Function: There must be a clear goal or metric that the AI can be optimized for, such as winning a game or, in a scientific context, predicting a protein's structure with atomic-level accuracy.

Availability of Data: The problem requires a large amount of data for the AI model to learn from, whether from existing databases or from an accurate simulator that can generate synthetic data.

The most prominent example of a "grand challenge" Hassabis has addressed is the protein folding problem.

The Problem: Proteins are the microscopic machines that perform nearly every function in our bodies, and their function is determined by their unique 3D shape. For 50 years, the challenge was to predict this complex 3D structure based solely on the one-dimensional sequence of its amino acid building blocks. This was a critical bottleneck in biology because experimentally determining a single protein's structure could take months or even years of painstaking work, severely limiting progress in understanding diseases and designing new drugs. The sheer number of possible conformations for a typical protein (estimated in Levinthal's paradox to be as high as 10 to the power of 300) made it computationally impossible to test all possibilities.
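A quick back-of-the-envelope calculation shows why brute force is hopeless at the conformation count quoted above. The sampling rate of $10^{13}$ conformations per second is an assumption picked for illustration, and the age of the universe is taken as roughly 13.8 billion years.

```latex
\begin{align*}
\text{Time to enumerate} &= \frac{10^{300} \text{ conformations}}{10^{13} \text{ conformations/s}} = 10^{287} \text{ s} \\
\text{Age of the universe} &\approx 13.8 \times 10^{9} \text{ yr} \times 3.16 \times 10^{7} \text{ s/yr} \approx 4.4 \times 10^{17} \text{ s} \\
\frac{10^{287} \text{ s}}{4.4 \times 10^{17} \text{ s}} &\approx 2 \times 10^{269} \text{ universe lifetimes}
\end{align*}
```

Real proteins fold in milliseconds to seconds, so nature is clearly not searching at random. That gap is the opening for a learned model that guides the search.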

Building on the success of AlphaFold, Hassabis is now applying this AI-driven approach to a broader set of scientific and medical problems, including:

Drug Discovery: Reimagining the entire drug discovery process to slash the time it takes to find new medicines from years down to months or even weeks. This involves modeling how proteins interact with each other and with other molecules like drugs.

Broad Scientific Discovery: Applying the same core AI methods to diverse fields like controlling fusion reactors, discovering new materials, improving weather forecasting, analyzing medical scans, and understanding genetic variations.

How AI is Used to Solve These Problems: The AlphaFold Example

Hassabis's approach, honed through developing game-playing AIs like AlphaGo, is to create systems that learn strategies for themselves rather than being explicitly programmed with solutions. This method involves learning a model of the environment (or problem space) from data and then using that model to guide an efficient search for the optimal solution.

The process for tackling protein folding with the AI model AlphaFold 2 can be broken down into these steps:

Data Ingestion and Training:

The AI was trained on the Protein Data Bank (PDB), a public repository containing roughly 170,000 protein structures that had been determined experimentally over decades. This vast dataset provided the foundational knowledge for the model to learn from.

Building a Hybrid System:

Solving this problem required more than just a massive neural network; AlphaFold 2 was an innovative, complex "hybrid system" that combined deep learning with actual biological knowledge.

The model's architecture incorporated evolutionary and physical constraints directly into its learning components, a key to its success. This required a multidisciplinary team of biologists, chemists, and machine learning experts working together.

Iterative Refinement and Search Guidance:

Similar to how AlphaGo doesn't analyze every possible move in a game, AlphaFold 2 does not brute-force every possible protein fold. Instead, it uses its learned model to efficiently guide its search through the enormous space of possible structures.

The system operates through an iterative process. It takes the amino acid sequence and generates an initial 3D structure prediction. It then uses a "recycling stage" to repeatedly feed its own output back into the system, refining the prediction with each step until it converges on a final, highly accurate structure. This process can involve hundreds of iterative steps to achieve atomic accuracy.
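The "feed the output back in" idea can be shown with a toy loop. To be clear, this is not AlphaFold: `toy_predict` is a made-up stand-in for the neural network that just moves each number halfway toward an arbitrary target, and `recycle`, `n_max`, and `tol` are names and values I chose for the sketch.

```python
from typing import Callable, List, Tuple

Coords = List[float]


def recycle(
    predict: Callable[[str, Coords], Coords],
    sequence: str,
    n_max: int = 50,
    tol: float = 1e-6,
) -> Tuple[Coords, int]:
    """Feed the model's own output back in until the prediction stops changing."""
    coords = [0.0] * len(sequence)  # crude initial guess
    for step in range(1, n_max + 1):
        new_coords = predict(sequence, coords)
        change = max(abs(a - b) for a, b in zip(new_coords, coords))
        coords = new_coords
        if change < tol:
            return coords, step  # converged
    return coords, n_max  # stopped at the iteration cap


def toy_predict(sequence: str, prev: Coords) -> Coords:
    """Stand-in for a trained network: nudges each value halfway to a target."""
    targets = [float(ord(ch) % 7) for ch in sequence]  # arbitrary toy targets
    return [p + 0.5 * (t - p) for p, t in zip(prev, targets)]


coords, steps = recycle(toy_predict, "MKTAYIAK")
print(steps, [round(c, 3) for c in coords])
```

Each pass starts from the previous answer rather than from scratch, so the error shrinks step by step until further passes change almost nothing.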

Achieving Atomic Accuracy and Validation:

The goal was to predict structures with an error of less than one angstrom (about the width of a hydrogen atom), the threshold for the prediction to be practically useful to scientists.

AlphaFold 2's performance was blindly assessed in the biennial CASP (Critical Assessment of protein Structure Prediction) competition, which is considered the gold standard benchmark. The model achieved atomic accuracy, leading the competition's organizers to declare the 50-year-old problem "essentially solved".

Democratizing the Solution:

After its success, DeepMind open-sourced the AlphaFold code and, in collaboration with EMBL-EBI, created a database of over 200 million predicted protein structures. This massive gift to the scientific community made the tool freely and universally available, leading to its immediate adoption by over 2 million researchers worldwide and accelerating discoveries in fields ranging from designing plastic-eating enzymes to fighting neglected diseases.

5. Aza Raskin

Using AI to Decode Animal Communication with Aza Raskin

Here is a breakdown of the problems Aza Raskin is addressing with AI and the specific methodologies he is employing.

The Core Problems Aza Raskin Aims to Solve

Aza Raskin and the Earth Species Project are tackling several fundamental, interconnected problems that have historically limited humanity's understanding of and relationship with other species.

The Perception Barrier: The primary problem is that our ability to understand other species is fundamentally limited by our ability to perceive their world. Humans experience a tiny fraction of reality, missing the vast diversity of communication happening around us constantly. Examples he cites include flowers that can "hear" approaching bees and produce sweeter nectar, or plants that emit ultrasonic sounds when stressed. The world is "awash in signal and meaning" that we are largely unaware of, creating a perception barrier that AI can help overcome.

The Data Overload and "Messiness" Problem: Even when data is collected, it is often unusable. Raskin highlights research on beluga whales where 97% of recorded communication data had to be discarded. This is due to issues like overlapping voices or background noise, making it impossible for human researchers to separate individual speakers for analysis. This severely limits our ability to understand animal cultures and hinders conservation efforts, as we are trying to protect species we can barely listen to.

The "Rosetta Stone" Problem: Historically, translating a language required a pre-existing key, like the Rosetta Stone, or parallel examples to link one language to another. For non-human languages, no such key exists. This has made true, deep translation seem impossible, trapping us in what Raskin calls a "speak and spell level of communication"—simply recording sounds and playing them back without understanding their meaning or context.

The Anthropocentric Worldview: A deeper, more philosophical problem is humanity's ego and our ingrained belief that we are the center of the universe. Raskin argues that even if we solve technical problems like climate change by drawing down carbon, it won't fix the "core generator function" of human ego. He believes that proving other species have rich, symbolic cultures and communicating with them can fundamentally shift our perspective, fostering a new, more responsible relationship with nature.

How AI is Specifically Used to Solve These Problems

Raskin's approach leverages recent breakthroughs in AI, particularly Large Language Models (LLMs) and foundation models, to systematically break down these barriers.

1. Using AI for "Source Separation" to Clean Messy Data

To solve the data usability problem, ESP developed an AI model specifically for source separation.

Problem: Multiple animals (like whales or dogs) vocalize simultaneously, creating a single, jumbled audio track.

AI Solution: The AI is trained to take a mixed recording and distinguish the individual voices within it. It can then pull out clean, separate audio tracks for each animal.

Impact: This technology unlocks the 97% of previously unusable data, creating a massive new resource for analysis and dramatically expanding the potential for scientific discovery.
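To make the idea of source separation concrete, here is a minimal sketch. It is not ESP's model, which is a trained neural network; it swaps in a far simpler technique, frequency masking with a Fourier transform, and it only works because the two made-up "callers" occupy different frequency bands. The signal values, the function name `separate_by_frequency`, and the 700 Hz split point are all assumptions chosen for illustration.

```python
import numpy as np

def separate_by_frequency(mixture, sample_rate, split_hz):
    """Split a mono mixture into a low band and a high band using FFT masks."""
    spectrum = np.fft.rfft(mixture)
    freqs = np.fft.rfftfreq(len(mixture), d=1.0 / sample_rate)
    low_mask = freqs < split_hz
    # Keep only the bins on one side of the split, then transform back to audio.
    low = np.fft.irfft(spectrum * low_mask, n=len(mixture))
    high = np.fft.irfft(spectrum * ~low_mask, n=len(mixture))
    return low, high

# Two hypothetical callers, each a pure tone (real vocalizations are far messier).
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate  # one second of audio
caller_a = np.sin(2 * np.pi * 300 * t)
caller_b = 0.5 * np.sin(2 * np.pi * 1200 * t)
mixture = caller_a + caller_b  # the single jumbled track a microphone would record

est_a, est_b = separate_by_frequency(mixture, sample_rate, split_hz=700)
print("caller A recovered:", np.allclose(est_a, caller_a, atol=1e-8))
print("caller B recovered:", np.allclose(est_b, caller_b, atol=1e-8))
```

Real animal voices overlap in frequency, which is exactly why a fixed mask like this fails in the field and a learned model is needed.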

2. Building Geometric "Meaning Shapes" to Translate without a Rosetta Stone

The core of ESP's translation methodology is based on a foundational AI breakthrough from 2017: the ability to translate between human languages without parallel examples, meaning no dictionary or paired sentences linking one language to the other.

The AI Process:

Turn Semantics into Geometry: The AI processes vast amounts of text (or any data) and learns the relationships between concepts (semantic relationships). It then represents these relationships as a geometric structure, often called a "latent space" or an "embedding." In this "shape," words with similar meanings are located close to each other, and relationships become directions: the step from "man" to "king" is roughly the same vector as the step from "woman" to "queen."

Discover a Universal Shape: Researchers discovered that, counterintuitively, the "meaning shapes" for different human languages (like English, Spanish, and Japanese) are fundamentally the same. You can rotate one shape so that it lines up closely with another, mapping "dog" in English to "perro" in Spanish, for instance. This reveals a "universal human meaning shape."

Apply to Animal Communication: ESP's goal is to apply this same principle to animal communication. By analyzing massive datasets of animal vocalizations and behaviors, they aim to build a "meaning shape" for a given species. The hypothesis is that parts of this animal-specific shape will overlap with the universal human shape, allowing for direct translation of shared concepts (like grief, surprise, or self-awareness). The parts that don't overlap would represent concepts unique to that species' experience, which would be equally fascinating.
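The two geometric ideas above can be sketched in a few lines. This is a toy under stated assumptions, not ESP's pipeline: the vectors are invented, the "Spanish" space is manufactured by rotating the "English" one, and the rotation is fitted from four known word pairs using the standard orthogonal Procrustes solution. The 2017 methods are more striking because they find such a rotation without any known pairs, and real embedding spaces line up only approximately.

```python
import numpy as np

# Part 1: an analogy as vector arithmetic, using hand-made 2-D vectors.
# Axis 0 stands for "royalty" and axis 1 for "gender" (illustrative assumptions).
toy = {
    "king": np.array([1.0, 1.0]),
    "queen": np.array([1.0, -1.0]),
    "man": np.array([0.0, 1.0]),
    "woman": np.array([0.0, -1.0]),
}
guess = toy["king"] - toy["man"] + toy["woman"]
analogy_answer = min(toy, key=lambda w: np.linalg.norm(toy[w] - guess))

# Part 2: aligning two "meaning shapes" with a rotation.
rng = np.random.default_rng(0)
english_words = ["dog", "cat", "water", "sun", "tree"]
spanish_words = ["perro", "gato", "agua", "sol", "árbol"]
english = rng.normal(size=(5, 4))  # made-up 4-D embeddings, one row per word

# Manufacture the "Spanish" space as the same shape, turned by an orthogonal matrix.
true_rotation, _ = np.linalg.qr(rng.normal(size=(4, 4)))
spanish = english @ true_rotation

def fit_rotation(source, target):
    """Orthogonal matrix R minimizing ||source @ R - target|| (orthogonal Procrustes)."""
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt

# Fit on the first four word pairs only, then translate the held-out word "tree".
rotation = fit_rotation(english[:4], spanish[:4])
mapped_tree = english[4] @ rotation
nearest = int(np.argmin(np.linalg.norm(spanish - mapped_tree, axis=1)))
translation = spanish_words[nearest]

print("king - man + woman is closest to:", analogy_answer)
print("tree maps to:", translation)
```

The held-out word is the point of the exercise: once the rotation is known, a word that was never paired still lands next to its counterpart, which is the property ESP hopes will carry over, at least in part, to animal communication.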

3. Creating Multimodal Models to Translate Beyond Audio

Raskin emphasizes that communication is more than just sound. ESP is building multimodal foundation models that integrate different data types to get a richer picture of meaning.

Problem: A sound's meaning is deeply connected to behavior and context. What does a specific whale call mean? Is it related to diving, socializing, or feeding?

AI Solution: By using tags that record audio, video, and kinematics (how an animal moves, twists, and accelerates), the AI can learn to connect vocalizations with specific behaviors. It learns to translate motion into meaning. For example, the model could determine what sound is associated with a dive, or what movement corresponds to a "hello" vocalization.

Impact: This moves beyond simple audio analysis to understanding the full context of communication, creating a much more robust and accurate translation model.
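As a rough illustration of linking sound to behavior, the sketch below counts which behavior follows which call type in a small, invented tag log and turns the counts into conditional frequencies. It is a deliberately simple stand-in for a multimodal foundation model, and the call labels, behaviors, and the helper `behavior_given_call` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical tag log: (call type heard, behavior observed shortly afterward).
events = [
    ("call_A", "dive"), ("call_A", "dive"), ("call_A", "feed"),
    ("call_B", "socialize"), ("call_B", "socialize"), ("call_B", "dive"),
    ("call_A", "dive"), ("call_B", "socialize"),
]

def behavior_given_call(events):
    """Estimate P(behavior | call) from co-occurrence counts."""
    counts = defaultdict(Counter)
    for call, behavior in events:
        counts[call][behavior] += 1
    return {
        call: {b: n / sum(c.values()) for b, n in c.items()}
        for call, c in counts.items()
    }

probs = behavior_given_call(events)
for call, dist in probs.items():
    likeliest = max(dist, key=dist.get)
    print(f"{call}: most often followed by {likeliest} ({dist[likeliest]:.0%})")
```

A table like this only shows association, not meaning. A learned model works from raw audio, video, and motion rather than hand-assigned labels, but the underlying question is the same: which signals reliably travel with which actions.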

4. Generating Synthetic Communication for Interactive Experiments

The final step is to test the AI's understanding through two-way communication.

Problem: The current state-of-the-art is just playing back recordings, which Raskin compares to a "speak and spell" toy—a static, non-interactive method.

AI Solution: ESP is using generative AI to create synthetic, fluent animal vocalizations. An AI can listen to a few seconds of a voice and then continue speaking in that voice, a technique now being applied to bird songs and whale calls.

The Goal: The ultimate goal is to move towards interactive AI-to-animal conversation. This would allow researchers to test hypotheses in real-time. For example, if the AI thinks a certain sound means "dive," it could generate that sound and see if the animal dives, thereby validating its understanding of context and meaning.
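The hypothesis test described above can be sketched as a playback experiment with a control condition. The trial outcomes below are invented, and the permutation test is one common way to ask whether a difference in dive rates could be chance; nothing here is drawn from ESP's actual protocol.

```python
import random

def permutation_p_value(treatment, control, n_permutations=10_000, seed=0):
    """One-sided permutation test: is the treatment rate higher than control by chance?"""
    rng = random.Random(seed)
    observed = sum(treatment) / len(treatment) - sum(control) / len(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    n_c = len(control)
    hits = 0
    for _ in range(n_permutations):
        # Shuffle the labels: if the sound means nothing, grouping should not matter.
        rng.shuffle(pooled)
        diff = sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / n_c
        if diff >= observed - 1e-12:
            hits += 1
    return observed, hits / n_permutations

# Hypothetical outcomes: 1 = animal dived within a set window, 0 = it did not.
synthetic_dive_call = [1] * 9 + [0] * 1   # trials where the generated "dive" sound was played
control_sound = [1] * 2 + [0] * 8         # trials where an unrelated sound was played

observed, p_value = permutation_p_value(synthetic_dive_call, control_sound)
print(f"difference in dive rate: {observed:.2f}, p-value: {p_value:.4f}")
```

A small p-value would support the guess that the sound is tied to diving, though it would not by itself show what the sound means to the animal.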

Raskin is clear about the profound ethical considerations, advocating for a "prime directive" or "Geneva Convention for cross-species communication" to avoid disrupting ancient animal cultures with this powerful new technology.


Wow, Tom! What a generous and fascinating gift you’ve given here. I’m gonna share it right on my own Substack. You’ve used the methods to show the methods, and I love that!